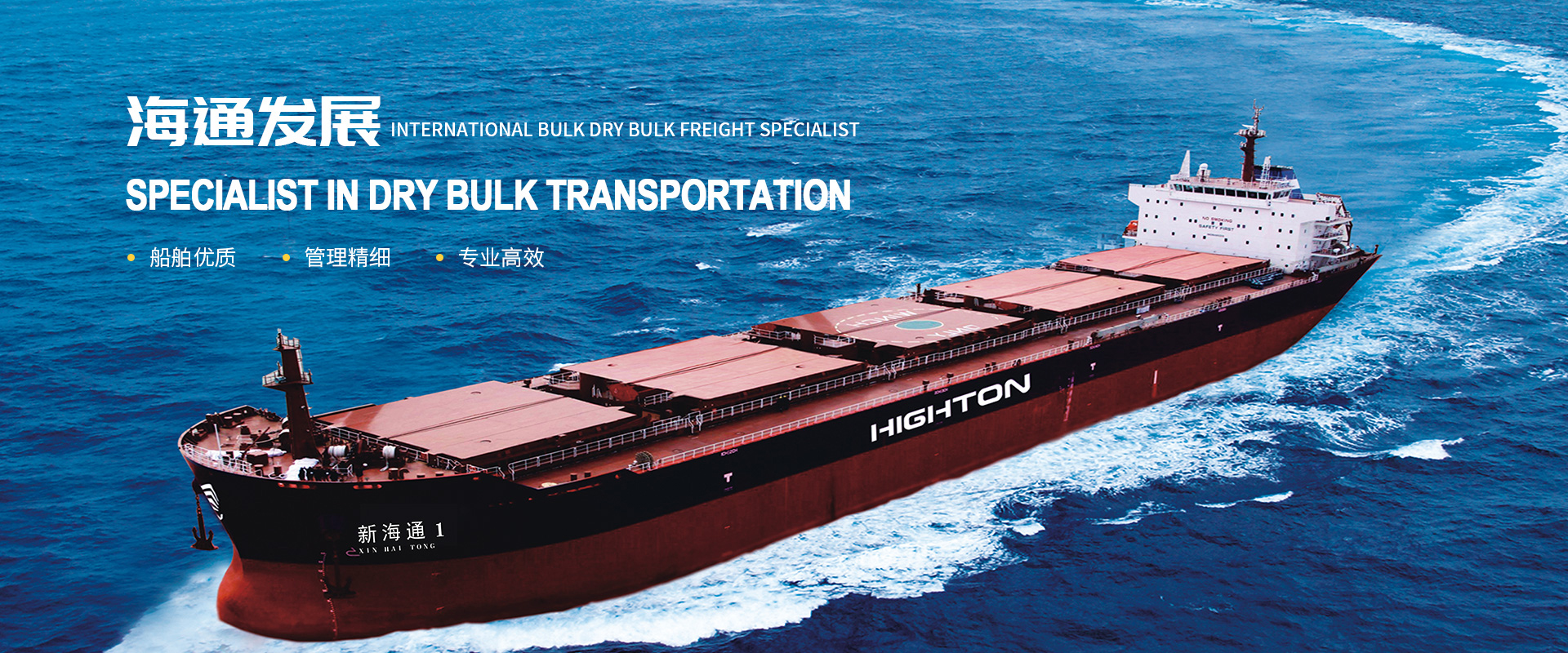

Focused on specialized segments of the shipping industry

Las Vegas (Officially Certified Website) Login Portal · Made in Las Vegas

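About Las Vegas

Company Overview

Organizational Structure

Corporate Culture

News Center

Company News

Industry News

Shipping Headlines

Business Areas

Domestic Navigation Area Transport Services

International Navigation Area Transport Services

Vessels by Navigation Area

Cargo Types

Las Vegas Official Portal

Human Resources

Contact Us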

Changting Street, Ninghua Subdistrict, Taijiang District, Fuzhou

Tel: 0591-86291786